There's a lot of hype surrounding Windows 10 right now and I'm not sure why. I notice several problems and no real benefits. Everyone is saying to just upgrade, but I don't feel like upgrading to something that is hugely inefficient and spies on me. If anyone has a good reason to upgrade, let me know in the comments.

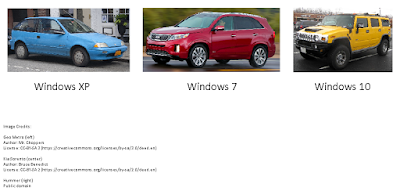

If Windows versions were cars:

Why do Windows and Office get bigger and slower with each release? Windows XP came on a CD and a typical installation could fit in a couple of gigabytes, let's say just under 2 GiB. Windows Vista and up come on DVDs and a typical installation takes about 15 GiB. Why is that? I use Windows 7 and it works very well for me, but I don't know what it could possibly have that would take up an extra 13 GiB compared to Windows XP.

Upgrading past Windows 8.1 just doesn't make sense in my opinion. More importantly, we all know Windows 10 sends usage data to Microsoft. How much is it sending? We probably won't know until there's a data breach and it ends up in the news.

Another thing to ask is, why was Windows 10 free? And why did the free offer last so long past the deadline? I was able to activate Windows 10 using my Windows 7 COA in April 2017, long after that was supposed to stop working. An article on howtogeek.com says this still works as of January 5, 2018. They write, "it’s also possible Microsoft will look the other way and keep this trick around to encourage more Windows 10 upgrades for a long time to come."

At first I thought it was just a way to get free beta testers, but I suspect it also has to do with the data collection. If something is free then you're (usually) the product. I hear that people are quitting Facebook because of privacy and data collection issues, but what if using Windows 10 is just as bad as using Facebook, or even worse? Windows 10 could provide far more data than any social network since it can potentially watch everything you do.

The biggest problem with Windows 10 may not even be the data collection, but instead the forced updates. It almost feels like Microsoft owns any computer running Windows 10 and they're just letting you use it. I heard that they're also moving to a new driver signing system where you have to pay to get kernel-mode drivers signed before Windows 10 can use them. This reminds me of how Blackberry phones won't run native C++ apps unless they're signed by a Blackberry-issued certificate, even for development. Thankfully Blackberry's signing process is free, but it's still not cool to have to get permission to run things on your own devices. Windows should not be as locked-down as iPhone and Blackberry.

Recently a family member bought a laptop from Wal-Mart but after I explained how bad Windows 10 is, they took my suggestion to migrate their old laptop's Windows 8.1 license. After I installed Windows 8.1, I found out that many of the new laptop's devices only had drivers for Windows 10. It seemed odd since Windows 8.1 isn't that old. I've never seen a hardware manufacturer support only one version of Windows, but that was how it was for this laptop.

I tried everything, including unzipping the installers and attempting to manually install the drivers, but nothing worked. It turns out that Intel, the company that made the laptop's CPU, graphics, and Wi-Fi, doesn't want to support anything below Windows 10. I can almost understand with the GPU and Wi-Fi, but since when is an x86 CPU intended for only one version of Windows? In the end, I was asked to just install Linux. I set up Ubuntu and now everything works like it should, without the data collection.

I've decided to keep using older Windows versions as long as possible. I use Windows 7 on my desktop and XP on my laptop. And yes, my laptop is online. I also dual-boot my desktop with Windows XP so I can use an old analog video capture card.

I really like Windows XP because of its simple interface and low requirements. Everyone seems to freak out when they hear of people keeping an XP system on the Internet. I know about viruses like WannaCry, but as I understand it, they depend on someone to run them before they can infect a LAN. I'm behind a firewall and I'm always careful about what I download, so I don't see a problem yet.

I'm not saying Windows XP is for everyone. In fact, I only recommend it if you're a power user who can secure it properly. Even then, it's probably better to use Windows 7 since it has better software support.

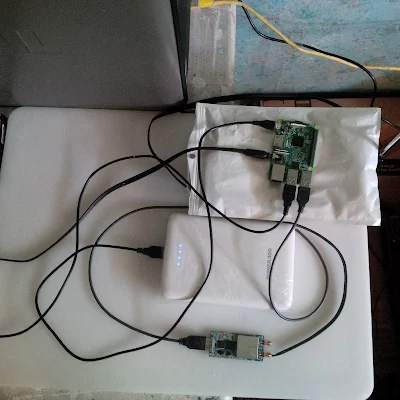

I can see that Microsoft, Intel, and probably others are planning to push everyone they can onto Windows 10. To prepare, I've archived my Windows ISOs on an external hard drive and downloaded a large collection of offline update installers. In fact, as I write this, I'm downloading all the updates for Windows Server 2003, XP, 7, 8, and 8.1, and Office 2003 and 2007.
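If you keep an archive like this, it may be worth recording checksums so you can tell later whether a file on the external drive has gone bad. Here is a minimal sketch in Python, assuming the ISOs and update installers all live under one folder. The function names (`sha256_of`, `build_manifest`, `find_damaged`) are my own for illustration and not part of any Microsoft tool.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, read in chunks so large ISOs fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(archive_dir):
    """Map each file's path (relative to archive_dir) to its SHA-256 digest."""
    root = Path(archive_dir)
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def find_damaged(archive_dir, manifest):
    """Return the names from an earlier manifest that are now missing or have changed."""
    current = build_manifest(archive_dir)
    return sorted(
        name for name, digest in manifest.items() if current.get(name) != digest
    )
```

The idea would be to call `build_manifest` once right after downloading, save the result somewhere safe (for example with `json.dump`), and run `find_damaged` against that saved manifest every so often. An empty list means every archived file still matches its original checksum.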

Here are some OS options if, like me, you want to avoid Windows 10.

Windows 7

I'd say to stay with Windows 7 as long as possible, unless you're using a tablet, in which case I think Windows 8.1 is best.

You should acquire and archive Windows 7 installation media and updates now, just in case Microsoft tries to kill this version later. After I installed Windows 7 and activated it, I used DriveImage XML to make a drive image so I can revert to a fresh installation whenever I need to.

I can see Windows 7 being supported by software and most hardware for a long time so this should be a good option for quite a while.

Ubuntu or Lubuntu

There are many apps that run on Linux, such as Chrome, Opera, LibreOffice, 4K Video Downloader, and VeraCrypt, so switching to Linux might work for you. It might be your only other option if you get a new computer.

Windows 8.1 (best for tablets, not PCs)

I know many people disagree, but I think Windows 8.1 is great for tablets. Unfortunately, the Windows 8 app store seems to be losing apps rapidly. If you're interested, you should acquire and archive Windows 8 installation media.

Windows XP

I use this every day on my laptop and it works well for me. It has decent software and hardware support but be prepared for some recent software to not work. I'll probably publish and maintain a list of XP-compatible software and hardware in the future.

Windows XP x64 Edition (not really recommended)

Recently I heard about Windows XP x64 Edition. It sounded interesting so I exercised my Windows 7 Pro downgrade rights (pictured below) to install a copy in a VM and on a laptop. (Don't worry, I had enough spare Windows 7 licenses to cover this.)

Above: an excerpt from the Windows 7 Pro license agreement.

It looked and acted just like regular 32-bit Windows XP, and I was surprised at how many current 64-bit apps run on it. The downside is that it requires special drivers. I've seen some drivers work in both 32-bit Windows 7 and 32-bit XP, but 64-bit Windows 7 and up cannot share drivers with 64-bit Windows XP. It turns out that 64-bit Windows XP uses a different driver model, one that it shares with Windows Server 2003 x64. Because 64-bit Windows XP is so rare, it's hard to find drivers for it, but I think searching for Windows Server 2003 x64 drivers might help. I was able to find a 64-bit XP driver for my GTX 750 Ti, but some SanDisk flash drives don't work.

As for compatibility, I can confirm that the following items work in 64-bit Windows XP:

- Seagate's 5 TB GPT-formatted external hard drive

- K-Lite Codec Pack, including Media Player Classic 64-bit

- UltraSearch x64

- Chromium 44 x64