Pixels and color storage methods

Up until now, I only said that image data is not RGB, but rather YUV. But that doesn't tell you anything about how it's stored on the computer. The image, as you know, is 960x540 pixels. That means 960 pixels wide by 540 pixels high. When it was taken by the camera and when it's shown on your screen, it is merely a 2-dimensional array of pixels. It was not subdivided because it's just a plain image, not yet encoded with VP9.

Let's zoom in so you can get an idea of the individual pixels in the B/W image.

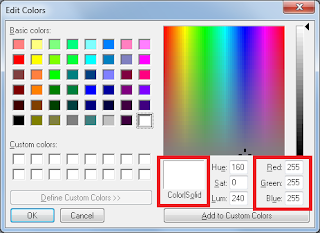

If you use the eyedropper tool in MS Paint, you can pick up a color from an image. You can see just what that color's RGB value is by going into Edit Colors.

As you can see, white is 255, 255, 255. As we discussed earlier, pixel values in VP9 follow a similar system but with YUV. Shades of gray, which is what the B/W YUV image is made up of, can be made in RGB by setting all three numbers to the same value.

Most importantly, why do you think MS Paint is limited to 255? It's because colors on a computer are almost always 8 bits (bits means 0's and 1's) per channel. On a computer screen, channels are RGB, hence MS Paint's use of RGB instead of YUV.

If you have 8 bits, each capable of holding 0 or 1, you can think of them as a row of light switches. How many combinations of On and Off can you come up with by flipping 8 light switches? The answer is 2 raised to the 8th power, or 256. That's because you have 2 states (On/Off) and you have 8 places that can be set to either state. But if you have 10 light switches, that would be 2 to the 10th, or 1024.

You can't reach 256 with 8 bits because 256 is how many states you have. One of them is 0. It would be like having 60 minutes in an hour but ending each hour on a clock at 60 instead of 59.
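If you'd like to check the light-switch math yourself, here is a minimal Python sketch. The function name `value_range` is just something I made up for illustration; it counts the states for a given number of bits and reports the largest value you can store.

```python
def value_range(bits):
    """Return (number_of_states, max_value) for an unsigned field of `bits` bits."""
    states = 2 ** bits          # 2 states per switch, `bits` switches
    return states, states - 1   # one of the states is 0, so the max is states - 1


for bits in (8, 10, 16):
    states, max_value = value_range(bits)
    print(f"{bits}-bit: {states} states, values 0 to {max_value}")
```

The maximum is always one less than the number of states, which is exactly the 60-minutes-but-the-clock-stops-at-59 situation.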

In this tutorial we will be dealing with 10-bit pixels, capable of ranging in value from 0 to 1023 instead of the usual 8-bit values you may be familiar with that range from 0-255. This is because it's a proven fact that 10-bit video carries higher quality at a given bitrate than 8-bit video. I'm not joking when I say that a 10-bit 1080p video at 1 Mbps can actually look a lot better than an 8-bit 1080p video at 2 Mbps.

Understanding RAW image data

You may have heard of RAW images taken by expensive DSLR cameras, but that's not what we're talking about here. In video, RAW data is a bit different.

A common format for RAW video data is YUV420p. 8 bits per value is implied because a bit depth wasn't specified. You can export to this format using a tool called FFmpeg. I'll describe how YUV420p files are structured before we move on because although we won't be using YUV420p, we will be using YUV420p10le, which is quite similar but more complicated.

In a RAW YUV420p video file, each frame is stored one after the other with no separators. It is simply the Y (B/W image), U and V. Then it repeats for the next frame.

You're probably familiar with the fact that computer data is stored as bytes, and that bytes are 8 bits each. Therefore, a byte can represent any number from 0 to 255. In a readable text file, each letter or other character is simply a number (usually less than 128) that your text reader knows corresponds to a letter or character. But if you've ever opened an image or program in a text editor, you know that they contain weird random characters. That's because the bytes in the file are storing numbers that don't correspond to readable characters.

(A PNG file opened in Notepad)

In a YUV420p video, we first store the B/W image pixel-by-pixel in raster scan fashion, meaning left to right and top to bottom. Note that there is no subdivision here. The raster scan does the whole image line by line.

So you see, raster scanning begins at the absolute top-left pixel, scans to the right until the end, goes down one, jumps back to the left, and goes again.
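Raster order can be sketched as a simple index calculation. This is only an illustration: the helper names `raster_offset` and `raster_coords` are my own, and I'm assuming coordinates that start at 0 in the top-left corner.

```python
def raster_offset(x, y, width):
    """Position of pixel (x, y) in a row-by-row (raster scan) array, 0-based."""
    return y * width + x


def raster_coords(offset, width):
    """Inverse of raster_offset: turn an array position back into (x, y)."""
    return offset % width, offset // width


WIDTH = 960  # width of the example image

print(raster_offset(0, 0, WIDTH))    # top-left pixel
print(raster_offset(959, 0, WIDTH))  # last pixel of the first row
print(raster_offset(0, 1, WIDTH))    # first pixel of the second row
print(raster_coords(960, WIDTH))     # back to (x, y)
```

In the 8-bit format each pixel is one byte, so this position is also the byte offset within the B/W image data.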

[You don't need to know the following formulas unless you choose to follow along. If you're just reading then you'll be fine without them]

That will take up (width * height) bytes, since each pixel takes one byte. Then we need to store the U and V images. Since the width and height of those are 1/2 the dimensions of the B/W image, the data for U and V will each be ((width / 2) * (height / 2)) bytes. Since we must store both U and V this way, that will be 2 * ((width / 2) * (height / 2)) bytes.

So now we have (width * height) + (2 * ((width / 2) * (height / 2))) bytes of data for just one frame. With an image size of 960x540, that comes out to 777,600 bytes total.

So for each frame in our YUV420p RAW video, the first 518,400 bytes will be the B/W image, the next 129,600 bytes will be the U image, and the remaining 129,600 bytes will be the V image.
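The same arithmetic can be written as a small Python sketch. The function names are hypothetical, and I'm assuming the width and height are both even (as they are for 960x540), so the halving comes out exact.

```python
def yuv420p_layout(width, height, bytes_per_sample=1):
    """Plane sizes and offsets (in bytes) for one frame. Assumes even dimensions."""
    y_size = width * height * bytes_per_sample
    chroma_size = (width // 2) * (height // 2) * bytes_per_sample
    return {
        "y_offset": 0,                     # B/W image comes first
        "u_offset": y_size,                # U starts right after Y
        "v_offset": y_size + chroma_size,  # V starts right after U
        "y_size": y_size,
        "u_size": chroma_size,
        "v_size": chroma_size,
        "frame_size": y_size + 2 * chroma_size,
    }


def frame_offset(frame_index, frame_size):
    """Byte offset of a frame; frames are stored back to back with no separators."""
    return frame_index * frame_size


layout = yuv420p_layout(960, 540)
print(layout)
print(frame_offset(1, layout["frame_size"]))  # where the second frame begins
```

Because there are no separators, finding any frame or plane in the file is just multiplication and addition.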

Understanding YUV420p10le RAW image data

YUV420p only handles values from 0 to 255 because it's an 8-bit format. Since we want to work with 10-bit image data, we need a way to store values from 0 to 1023. This is where YUV420p10le comes in.

Since files are stored as bytes and every byte is 8 bits, we can't easily store 10-bit values because we'd have a fraction of a byte left over after almost every 10-bit value we write. This is why YUV420p10le stores the 10-bit values inside a larger 16-bit value. Since 16-bit numbers can range from 0 to 65,535 it will easily store our 0-1023 values.

So now we have to store 2 bytes for each value (16 bits = 2 bytes). The file structure stays the same so our file size doubles to 1,555,200 bytes or 1.48 MiB.
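Here is a rough Python sketch of both points: a 10-bit value sitting inside a 16-bit container, and the doubled frame size. The names are my own, and I'm again assuming even dimensions.

```python
BYTES_PER_SAMPLE = 2  # each 10-bit value is stored in a 16-bit (2-byte) container


def frame_size_10bit(width, height):
    """Bytes per frame when every Y, U and V value takes 2 bytes. Assumes even dimensions."""
    samples = width * height + 2 * (width // 2) * (height // 2)
    return samples * BYTES_PER_SAMPLE


# The largest 10-bit value written out as 16 binary digits:
# the top 6 digits are always 0 because they are never needed.
print(format(1023, "016b"))

size = frame_size_10bit(960, 540)
print(size, "bytes")
print(round(size / 1024 ** 2, 2), "MiB")
```

Those 6 unused bits per value are the price of keeping every value aligned to whole bytes.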

By now you may have guessed that having a higher range of available values makes for a higher-quality image. In a normal PC image, for example, 8 bits per color channel times 3 channels (RGB) equals 24 bits per pixel overall, or 16.7 million different colors. But what if you only have 256 colors at your disposal? The image will look quite bad.

(The image in only 256 colors)

Videos encoded with 8-bit YUV420p look great. In fact, it's the bit depth that most YouTube videos are encoded with; HDR video is the exception, since it needs at least 10 bits. But 10-bit video is better because you can express a far greater range of light and dark. Converting from 8-bit to 10-bit increases the range by simply multiplying the values to bring them up to the 10-bit range, but there will not be any increase in actual quality because you can't get information that was never there to begin with.

If that sounds confusing, let's say you have a gradient of 8-bit pixels: {15, 16, 17, 18, 19}. Now if you wanted to convert them to 10-bit, you would multiply each entry by 4 to get {60, 64, 68, 72, 76}. But notice that you don't have any values in-between whole multiples of 4. If you had recorded the video with a 10-bit camera you could have any value in the 10-bit range (theoretically), but since you converted from 8-bit you will only have the 256 different 8-bit levels spread evenly within 1024 total levels.

When you convert an 8-bit video to 10-bit, this is all you're doing.

This means that in our case the only purpose of converting to 10-bit is so we can have 10-bit values for our VP9 encoder to work with.
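The multiply-by-4 conversion can be sketched in a few lines of Python. The function name `to_10bit` is hypothetical; this only illustrates the arithmetic described above, not how any particular tool does the conversion.

```python
def to_10bit(samples_8bit):
    """Scale 8-bit values into the 10-bit range by multiplying by 4."""
    return [value * 4 for value in samples_8bit]


gradient_8bit = [15, 16, 17, 18, 19]
gradient_10bit = to_10bit(gradient_8bit)
print(gradient_10bit)

# Every converted value is a whole multiple of 4, so the in-between
# levels (61, 62, 63, ...) never appear.
print(all(value % 4 == 0 for value in gradient_10bit))

# Multiplying by 4 is the same as shifting left by 2 bits.
print([value << 2 for value in gradient_8bit] == gradient_10bit)
```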

Opening RAW videos in a hex editor

Let's see what RAW video data looks like in a hex editor. What you're looking at in these hex screenshots are the pixel values at the beginning of the file, the first few pixels in the top row of the B/W image. These are shades of gray expressed as numbers, but if that doesn't make sense then I'll try to explain.

Here is what the 8-bit image looks like in a hex editor:

On the left we have hex digits and on the right, bytes as they'd be seen in a text editor

And this is the image in 10-bit format:

In this 10-bit example, each pixel value takes up 2 bytes.

There is an annoying quirk you have to watch out for on multi-byte numbers. Most computers read and write them as Little Endian which means byte-reversed.

Let's take the example of 255 which is 0xFF in hex. Hex works by using 0-9 and A-F to make a base-16 number system. 2 hex digits make a byte.

If we have a hex byte of 0xFF (255 decimal) and add 1 to make 256, it would "overflow" and we would get 2 hex bytes: 0x01 0x00. On Windows Vista and up, you can try setting Windows calculator to Programmer and use the Hex mode to enter 0100, then click Dec and it will say 256.

So now that we've established that, let's say you wanted to record that number in a file. You know about place value in the decimal system (tens, hundreds, etc.) so you'd expect to just write 0x01 0x00 to your file, right? Well, it turns out that due to a computer quirk carried over from the 1970s, the computer would prefer it if you did it in reverse as 0x00 0x01. This applies even to longer hex numbers like 0xDEADBEEF. Each pair of hex digits must be written in reverse order; do not write the single digits in reverse order. 0xDEADBEEF should become 0xEFBEADDE, not 0xFEEBDAED.

Since this weird reverse notation is called Little Endian, it makes sense that the normal order you'd expect to use is called Big Endian. Keep in mind that the bytes (pairs of hex digits) are what's reversed, never bits or single hex digits.
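You can see the byte reversal for yourself with Python's standard `struct` module and `int.to_bytes`. This is just a small demonstration using the same numbers as above; in `struct` format strings, `<` means Little Endian, `>` means Big Endian, and `H` means an unsigned 16-bit value.

```python
import struct

# 256 as a 16-bit number, written both ways.
little = struct.pack("<H", 256)
big = struct.pack(">H", 256)
print(little.hex(), big.hex())

# Reading the Little Endian bytes back gives the original number.
(value,) = struct.unpack("<H", b"\x00\x01")
print(value)

# A longer number: whole bytes are reversed, never single hex digits.
deadbeef_le = (0xDEADBEEF).to_bytes(4, "little")
print(deadbeef_le.hex())
```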

Little Endian is what the "le" in YUV420p10le stands for.

So now let's set our hex editor to group by Words (Word = 16 bits).

By default, the hex editor is set to Little Endian, so it reverses each pair of bytes into Big Endian order for proper viewing.

Let's press Ctrl+1 or go to View -> Display As -> Decimal. Now you can see that the values are all normal human-readable numbers and that they never exceed 940.

[Random trivia, not necessary to continue] Why don't the values exceed 940 when we were supposed to convert to a 0-1023 range? Because video of photographic subjects (i.e., not computer screen captures) customarily ranges not from 0-255 or 0-1023, but rather from 16-235 or from 64-940 (16-235 times 4).

Now you can see what I mean by shades of gray expressed as numbers.
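What the hex editor does in Word and Decimal mode can be sketched in Python. The bytes in `sample` are made up for illustration (they are not taken from the actual file), and `decode_words_le` is a hypothetical helper name.

```python
import struct


def decode_words_le(data):
    """Interpret raw bytes as a run of unsigned 16-bit Little Endian values."""
    count = len(data) // 2  # ignore a trailing odd byte, if any
    return list(struct.unpack(f"<{count}H", data[: count * 2]))


# Made-up sample bytes standing in for the first few pixels of a file.
sample = bytes.fromhex("40000002ac03")
values = decode_words_le(sample)
print(values)

# The customary 10-bit video range is the 8-bit range (16-235) times 4.
low, high = 16 * 4, 235 * 4
print(low, high)
print(all(low <= v <= high for v in values))
```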
